Apache Airflow Architecture

This lesson details the components that make up Airflow.

Apache Airflow is an open-source platform designed to programmatically author, schedule, and monitor workflows.

Its architecture is built to be dynamic, extensible, and scalable, which makes it suitable for work ranging from simple batch jobs to complex data pipelines with many dependencies.

Core Components

Airflow’s architecture is centered around four primary components:

- Web Server: A Flask-based web application used for viewing and managing workflows. It provides a user-friendly interface for monitoring workflow executions and their outcomes, triggering and pausing DAGs (Directed Acyclic Graphs), and managing the Airflow environment.

- Scheduler: The heart of Airflow that schedules workflows based on dependencies and schedules defined in DAGs. The scheduler triggers task instances to run at their scheduled times and manages their execution. It continuously polls for tasks ready to be queued and sends them to the executor for execution.

- Executor: Responsible for executing the tasks that the scheduler sends to it. Airflow supports several types of executors for scaling task execution, including the SequentialExecutor (for development/testing), LocalExecutor (for single machine environments), CeleryExecutor (for distributed execution using Celery), and KubernetesExecutor (for execution in Kubernetes environments).

- Metadata Database: A database that stores state and metadata about all workflows managed by Airflow. It includes information about the structure of workflows (DAGs), their schedules, execution history, and the status of all tasks. The database is essential for Airflow’s operation, allowing it to resume or retry tasks, keep history, and track which runs have already completed.

Key Concepts

- DAG (Directed Acyclic Graph): A collection of all the tasks you want to run, organized in a way that reflects their relationships and dependencies. A DAG defines the workflow that Airflow will manage and execute.

- Operator: Defines a single task in a workflow. Airflow comes with many predefined operators that can be used to perform common tasks, such as running a Python function, executing a bash script, or transferring data between systems.

- Task: An instance of an operator. When a DAG runs, Airflow creates tasks that represent instances of operators; these tasks then can be executed.

- Task Instance: A specific run of a task, characterized by a point in time (execution date) and a related DAG.
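The relationship between these concepts can be sketched with a toy model. The classes below (ToyOperator, ToyTask, ToyTaskInstance) are hypothetical names invented for illustration and are not part of Airflow's API; the sketch only assumes that a task is an operator placed in a DAG and that a task instance adds an execution date.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Callable


@dataclass(frozen=True)
class ToyOperator:
    """Hypothetical stand-in for an operator: a reusable unit of work."""
    name: str
    run: Callable[[], str]


@dataclass(frozen=True)
class ToyTask:
    """An operator placed in a specific DAG under a task id."""
    dag_id: str
    task_id: str
    operator: ToyOperator


@dataclass(frozen=True)
class ToyTaskInstance:
    """One run of a task, identified by DAG, task id, and execution date."""
    task: ToyTask
    execution_date: datetime

    def key(self) -> tuple:
        return (
            self.task.dag_id,
            self.task.task_id,
            self.execution_date.isoformat(),
        )


echo = ToyOperator(name="echo", run=lambda: "hello")
task = ToyTask(dag_id="daily_report", task_id="say_hello", operator=echo)

# The same task yields a distinct task instance for every execution date.
monday = ToyTaskInstance(task, datetime(2024, 1, 1))
tuesday = ToyTaskInstance(task, datetime(2024, 1, 2))
```

Both instances share one task definition, while their keys differ only in the execution date, which is what lets history be tracked per run.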

Execution Flow

- DAG Definition: Developers define workflows as DAGs, specifying tasks and their dependencies using Python code.

- DAG Scheduling: The scheduler reads the DAG definitions and schedules the tasks based on their start times, dependencies, and retries.

- Task Execution: The executor picks up tasks scheduled by the scheduler and executes them. The choice of executor depends on the environment and the scalability needs of the tasks.

- Monitoring and Management: Throughout execution, users can monitor and manage workflows using the Airflow web server. Upon completion, task statuses are updated in the metadata database, allowing users to review the execution history and logs.

Scalability and Extensibility

Airflow’s architecture supports scaling through its pluggable executor model, which allows it to run tasks on a variety of backend systems.

Its use of a central metadata database enables distributed execution and monitoring. Airflow’s design also emphasizes extensibility, allowing developers to define custom operators, executors, and hooks to integrate with external systems, making it a flexible tool for building complex data pipelines.

In this lesson, we’ll explore the architecture and components that make up Airflow. It primarily consists of the following entities:

- Scheduler

- Web server

- Database

- Executor

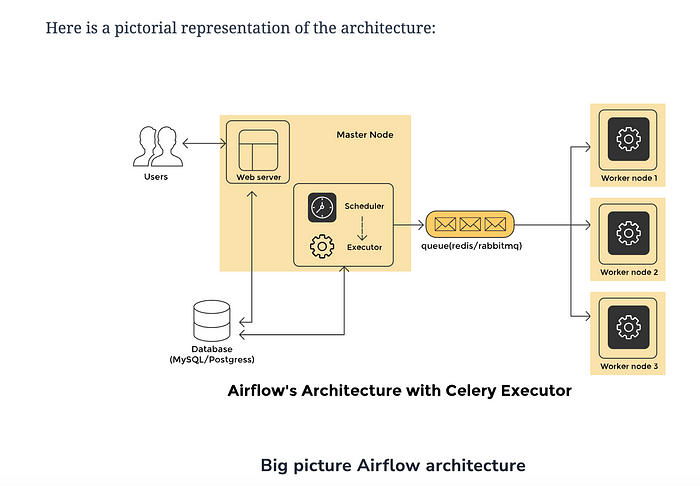

Here is a pictorial representation of the architecture:

Big picture Airflow architecture

These four pieces work together to make up a robust and scalable workflow scheduling platform. We’ll discuss them in detail below.

Scheduler

The scheduler is responsible for monitoring all DAGs and the tasks within them. When dependencies for a task are met, the scheduler triggers the task. Under the hood, the scheduler periodically inspects active tasks to trigger.
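The idea of triggering a task once its dependencies are met can be illustrated with Python's standard graphlib module. This is a toy sketch, not how the Airflow scheduler is implemented; the task names and the schedule function are assumptions made up for the example, and every task is assumed to succeed.

```python
from graphlib import TopologicalSorter

# Hypothetical DAG: each task maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "validate": {"extract"},
    "load": {"transform", "validate"},
}


def schedule(deps):
    """Return the batch of tasks triggered on each scheduling pass."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    batches = []
    while sorter.is_active():
        # Tasks whose dependencies have all completed.
        ready = sorted(sorter.get_ready())
        batches.append(ready)
        for task in ready:
            sorter.done(task)  # pretend the task ran successfully
    return batches


batches = schedule(dependencies)
```

Each inner list in batches holds tasks that became eligible in the same pass, so tasks such as transform and validate could run in parallel if the executor allows it.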

Web server

The web server is Airflow’s UI. It displays the status of the jobs and allows the user to interact with the metadata database as well as read log files from a remote file store, such as S3, Google Cloud Storage, or Azure Blob Storage.

Executor

The executor determines how the work gets done. There are different kinds of executors that can be plugged in for different behaviors and use cases. The SequentialExecutor is the default executor that runs a single task at any given time and is incapable of running tasks in parallel. It is useful for a test environment or when debugging deeper Airflow bugs. Other examples of executors include CeleryExecutor and LocalExecutor.

- The LocalExecutor supports parallelism by running tasks in multiple processes and is a good fit for running Airflow on a local machine or a single node. The Airflow installation for this course uses the LocalExecutor.

- The CeleryExecutor is the preferred method to run a distributed Airflow cluster. It requires Redis, RabbitMQ, or another message queue system to coordinate tasks between workers.

- The KubernetesExecutor calls the Kubernetes API to create a temporary pod for each task instance to run. Users can pass in custom configurations for each of their tasks.
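The pluggable nature of executors can be sketched in a few lines. The code below is not Airflow's executor interface; run_sequentially, run_in_parallel, and the EXECUTORS registry are hypothetical names. It also uses threads for brevity, whereas a real single-machine executor would typically use separate processes.

```python
from concurrent.futures import ThreadPoolExecutor


def run_sequentially(tasks):
    """Run one task at a time, in order (the idea behind a sequential executor)."""
    return [task() for task in tasks]


def run_in_parallel(tasks, max_workers=4):
    """Run tasks concurrently on a pool of workers on one machine."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [pool.submit(task) for task in tasks]
        # Collect results in submission order, not completion order.
        return [future.result() for future in futures]


def make_task(n):
    """Build a trivial task that squares a number."""
    return lambda: n * n


tasks = [make_task(n) for n in range(5)]

# Swapping the executor changes how the work runs, not what the work is.
EXECUTORS = {"sequential": run_sequentially, "parallel": run_in_parallel}
results = {name: runner(tasks) for name, runner in EXECUTORS.items()}
```

Both strategies produce the same results; only the execution strategy differs, which is the property that lets an executor be chosen per environment.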

Database

The state of the DAGs and their constituent tasks needs to be saved in a database so that the scheduler remembers metadata information, such as the last run of a task, and the web server can retrieve this information for an end user. Airflow uses SQLAlchemy, a Python Object Relational Mapping (ORM) library, to connect to the metadata database. In principle, any database supported by SQLAlchemy can be used to store the Airflow metadata, although the Airflow documentation recommends PostgreSQL or MySQL for production use. Configurations, connections, user information, roles, policies, and even key-value pair variables are stored in the metadata database. The scheduler parses all the DAGs and stores relevant metadata, such as schedule intervals, statistics from each run, and their task instances.
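To make the role of the metadata database concrete, here is a minimal sketch that stores task state in an in-memory SQLite database. It uses the standard sqlite3 module rather than SQLAlchemy, and the table name toy_task_instance, its columns, and the helper functions are assumptions for illustration, not Airflow's actual schema.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE toy_task_instance (
        dag_id TEXT NOT NULL,
        task_id TEXT NOT NULL,
        execution_date TEXT NOT NULL,
        state TEXT NOT NULL,
        PRIMARY KEY (dag_id, task_id, execution_date)
    )
    """
)


def set_state(dag_id, task_id, execution_date, state):
    """Insert the task instance, or overwrite its state if it already exists."""
    conn.execute(
        "INSERT OR REPLACE INTO toy_task_instance VALUES (?, ?, ?, ?)",
        (dag_id, task_id, execution_date, state),
    )
    conn.commit()


def last_run(dag_id, task_id):
    """Return (execution_date, state) of the most recent run, or None."""
    return conn.execute(
        "SELECT execution_date, state FROM toy_task_instance "
        "WHERE dag_id = ? AND task_id = ? "
        "ORDER BY execution_date DESC LIMIT 1",
        (dag_id, task_id),
    ).fetchone()


set_state("daily_report", "load", "2024-01-01", "success")
set_state("daily_report", "load", "2024-01-02", "running")
set_state("daily_report", "load", "2024-01-02", "failed")  # state update

row_count = conn.execute("SELECT COUNT(*) FROM toy_task_instance").fetchone()[0]
```

Because the state lives in a shared database, any component (a scheduler or a web UI in this analogy) can call last_run and see the same answer.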

Working

- Airflow parses all the DAGs in the background at a regular interval. The interval is set using the processor_poll_interval config, which is, by default, equal to one second.

- Once a DAG file is parsed, DAG runs are created based on the scheduling parameters. Task instances are instantiated for tasks that need to be executed, and their status is set to SCHEDULED in the metadata database. Since the parsing takes place periodically, any top-level code, i.e., code written in global scope in a DAG file, will execute every time the scheduler parses the file. This slows down the scheduler’s DAG parsing and increases memory and CPU usage. Therefore, caution is recommended when writing code in the global scope.

- The scheduler is responsible for querying the database, retrieving the tasks in the SCHEDULED state, and distributing them to the executors. The state for the task is changed to QUEUED.

- The QUEUED tasks are drained from the queue by the workers and executed. The task status is changed to RUNNING.

- When a task finishes, the worker running it marks it as either failed or succeeded. The scheduler then updates the final status in the metadata database.
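The state changes described in the steps above can be sketched as a small state machine. This is a simplified model: Airflow defines more states than the ones listed here, and the SUCCESS and FAILED labels, the ALLOWED table, and both helper functions are assumptions made for the example.

```python
# Allowed transitions in this simplified lifecycle.
ALLOWED = {
    "SCHEDULED": {"QUEUED"},
    "QUEUED": {"RUNNING"},
    "RUNNING": {"SUCCESS", "FAILED"},
    "SUCCESS": set(),
    "FAILED": set(),
}


def advance(current, new):
    """Move to a new state, rejecting transitions the lifecycle does not allow."""
    if new not in ALLOWED[current]:
        raise ValueError(f"illegal transition: {current} -> {new}")
    return new


def run_lifecycle(task_succeeds):
    """Walk one task instance through its states and return the history."""
    state = "SCHEDULED"
    history = [state]
    final = "SUCCESS" if task_succeeds else "FAILED"
    for next_state in ("QUEUED", "RUNNING", final):
        state = advance(state, next_state)
        history.append(state)
    return history


ok_history = run_lifecycle(task_succeeds=True)
failed_history = run_lifecycle(task_succeeds=False)
```

Calling advance("SCHEDULED", "RUNNING") raises a ValueError in this model, reflecting that a task must be queued before a worker picks it up.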

Airflow.cfg

Airflow comes with lots of knobs and levers that can be tweaked to extract the desired performance from an Airflow cluster. For instance, the processor_poll_interval config value, as previously discussed, controls how frequently Airflow parses all the DAGs in the background. Another config value, scheduler_heartbeat_sec, controls how frequently the Airflow scheduler should attempt to look for new tasks to run. A lower value makes the scheduler check for new tasks more often, which places more pressure on the metadata database. Yet another config, job_heartbeat_sec, determines how frequently task instances listen for external kill signals, e.g., when using the CLI or the UI to clear a task. A detailed configuration reference is available in the Airflow documentation.
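Since airflow.cfg is an INI-style file, the settings above can be read with Python's standard configparser. The fragment below is a sketch: it assumes all three options live under a [scheduler] section, as in Airflow 2.x, and the values shown are illustrative rather than recommendations.

```python
import configparser

# Illustrative fragment; section placement assumed from Airflow 2.x.
SAMPLE_CFG = """
[scheduler]
processor_poll_interval = 1
scheduler_heartbeat_sec = 5
job_heartbeat_sec = 5
"""

parser = configparser.ConfigParser()
parser.read_string(SAMPLE_CFG)

scheduler = parser["scheduler"]
poll_interval = scheduler.getfloat("processor_poll_interval")
scheduler_heartbeat = scheduler.getint("scheduler_heartbeat_sec")
job_heartbeat = scheduler.getint("job_heartbeat_sec")
```

Reading the file this way is handy for auditing a deployment's settings; changing the behavior of a running cluster still requires editing the configuration that Airflow itself loads.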